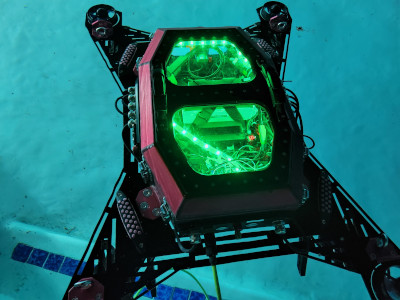

After Pico's development was completed with the SDSU Mechatronics team, I began working with the team on completing Scion for an in-person RoboSub competition during the summer of 2022. This was to be our first brand-new robot for an in-person RoboSub since 2017, and I was placed in charge of the programming team.

Design Specification

ROS

Thanks to my previous experience working on Pico, I had a good understanding of what worked and what didn't, and I also had advice from previous team members. Historically, we had difficulty deciding how separate programs should talk to each other. Originally everything ran in a single process; later the team tried reinventing ROS as MechOS, which finally led to Pico's Docker container solution. This time we decided from the get-go to use ROS (Robot Operating System) Noetic for communication between programs, as it was the industry standard and it worked well.

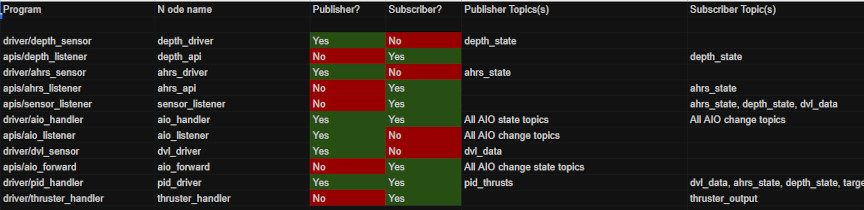

ROS is a meta-operating system for robots that works across several programming languages, but our main use case here was Python. It uses a publisher/subscriber model: many programs can listen to a single publisher and receive its data. Many different programs may be running on a computer at once, each needing data of different types, so designing with ROS in mind from the get-go was crucial. I made the above spreadsheet to organize our ROS topics, which are the names of the channels that publishers and subscribers "talk" on.
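To make the publisher/subscriber idea concrete, here is a minimal sketch in plain Python. It is a toy in-process message bus, not the rospy API, and the `TopicBus` class and the `/sensors/depth` topic name are assumptions made up for illustration.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class TopicBus:
    """Toy in-process message bus (hypothetical; NOT the rospy API)."""

    def __init__(self) -> None:
        # Maps a topic name to the callbacks subscribed to it.
        self._subscribers: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver message to every subscriber of topic; return how many received it."""
        callbacks = self._subscribers[topic]
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = TopicBus()
gui_log: list = []
ai_log: list = []

# Two independent listeners on the same (hypothetical) topic name.
bus.subscribe("/sensors/depth", gui_log.append)
bus.subscribe("/sensors/depth", ai_log.append)

# One publish call reaches both listeners.
delivered = bus.publish("/sensors/depth", 1.5)
```

The publisher never needs to know who is listening, which is why agreeing on topic names up front matters so much.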

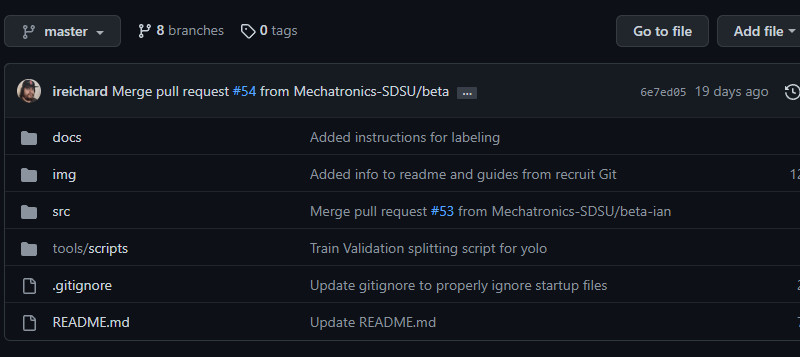

Git

Git is the go-to source control program in software development, and it was our choice for developing our robotics code on Scion. Git allows each of us to work on different features independently and then combine our "timelines" of the code together. This can lead to what's called a "merge conflict" if the same code was modified by two different people, but it is a worthy trade-off for what Git provides otherwise.

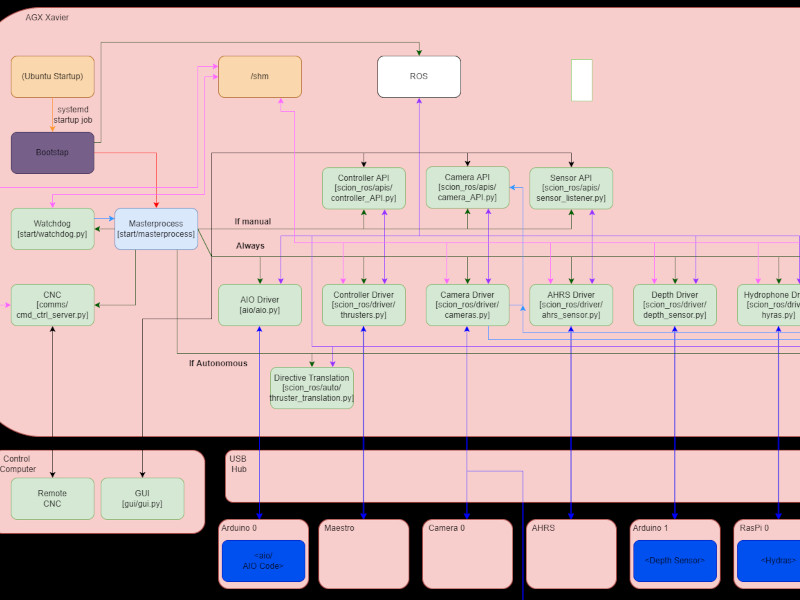

Data Intercommunication

Since we were building a ROS-heavy system, we needed to be clear not only about our ROS topics but also about every data pipeline that did not run over a ROS topic, since ROS couldn't handle every type of data we were going to use. Here is a later revision of our data pipeline. Whenever we added a new program, I added its connection to the greater whole to this chart. I was big on documentation for this project: I wanted each program to be well documented internally, and I wanted its role within the larger software system to be understood by others as well.

Python

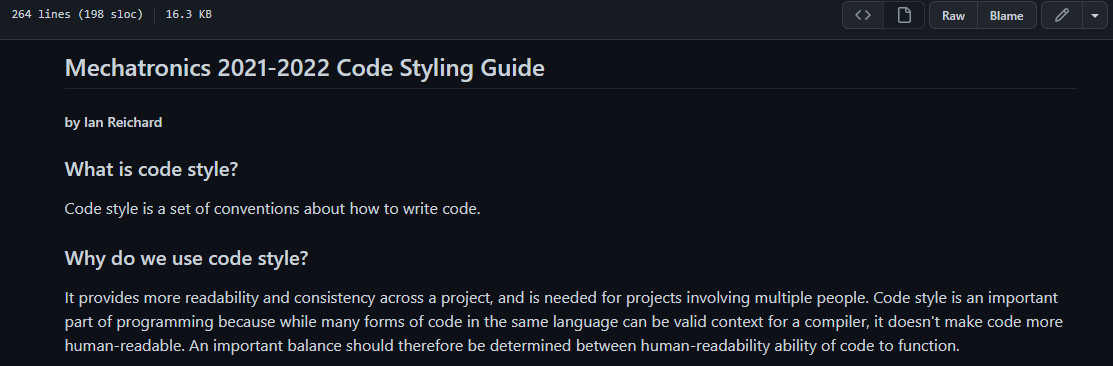

Before writing any Python, I made sure everyone would be on the same page for code development. One of the first things I did was write a style guide to govern all Python code development and keep the code readable for present and future members.

A style guide is not good enough on its own, however, and I made sure it was enforced on pull requests.

Development

Teamwork

The above picture shows our whole team at the competition after we gave our presentation. Most of the programming team had left by this time, and only 3 programmers remained for the final sprint up to competition, including our team president. Despite this, we had plenty of talent earlier in the semester, whom I led using industry-standard practices.

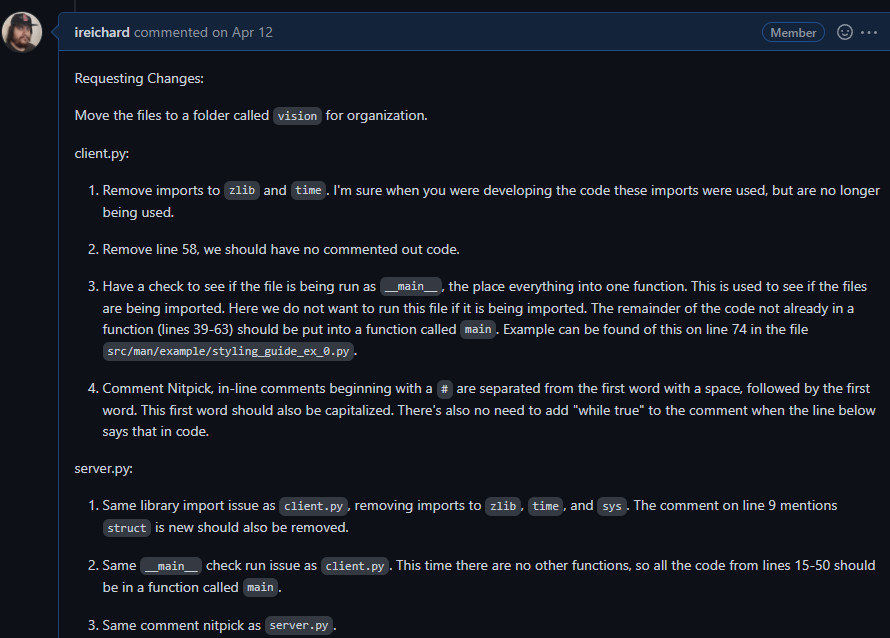

Scrum/Agile

Changing requirements are common in software development, especially on a robot where many engineers are involved, because we live in the real world where changes happen. This is why we used a kanban board with time-based deadlines, as in the agile method of software development. In agile you have a continuous development cycle where major features are not planned far in advance but are added in smaller "sprints". This lets a project be finished despite changes that up-front planning cannot account for, and it is one of the major industry standards for software development today.

Personal Contributions

In addition to my leadership and being in charge of integration, I personally contributed to the development of many software features on the robot. Here are the most important ones that I wrote myself.

Masterprocess

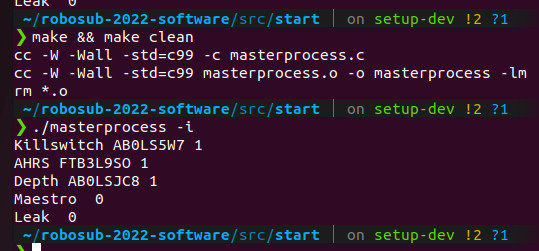

The masterprocess was a C program that used Linux system calls to fork new processes and set up pipes to the programs it started. Initially there were many features planned for it which necessitated it be written in C, but many of those were scrapped or offloaded. It was necessary because we had several different use cases for running our robot, so having one unifying program which could start up everything we needed and ONLY what we needed was helpful. It was my biggest pride and accomplishment on Scion.

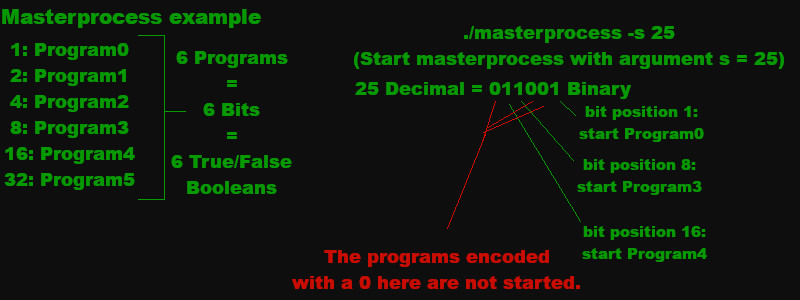

The masterprocess accepts a variety of arguments (aka parameters) when it is started up. When given the s argument, it starts whichever programs are encoded in the integer that follows. That program integer is a bitmask: each program is assigned a power of 2, so each bit of the integer in binary corresponds to one program to start or not start, and the integer is the sum of the values for the chosen programs. This allows a single unsigned 32-bit integer (4 bytes total) to control 32 programs, instead of storing 32 booleans in 8 bits of memory each (32 bytes total) or making a custom bitfield in C (4 bytes total with extra complexity).

The example above demonstrates how the masterprocess would start up programs given the argument 25.
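The decoding of the s argument can be sketched in a few lines of Python. The real masterprocess was written in C, so this is only an illustration of the bit arithmetic; the function names `programs_to_start` and `mask_for` are hypothetical, and numbering bits from the least significant end is an assumption.

```python
from typing import Iterable, List


def programs_to_start(mask: int) -> List[int]:
    """Return the bit positions that are set in a 32-bit program mask."""
    if not 0 <= mask < 2**32:
        raise ValueError("mask must fit in an unsigned 32-bit integer")
    # Bit 0 is the least significant bit (an assumption about the numbering).
    return [bit for bit in range(32) if mask & (1 << bit)]


def mask_for(bits: Iterable[int]) -> int:
    """Build the program integer from the bit positions of the wanted programs."""
    mask = 0
    for bit in bits:
        mask |= 1 << bit
    return mask


# 25 = 16 + 8 + 1 = 0b11001, so bits 0, 3 and 4 are set.
print(programs_to_start(25))  # [0, 3, 4]
```

Under this numbering, the argument 32 sets only bit 5, so a single program would be started.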

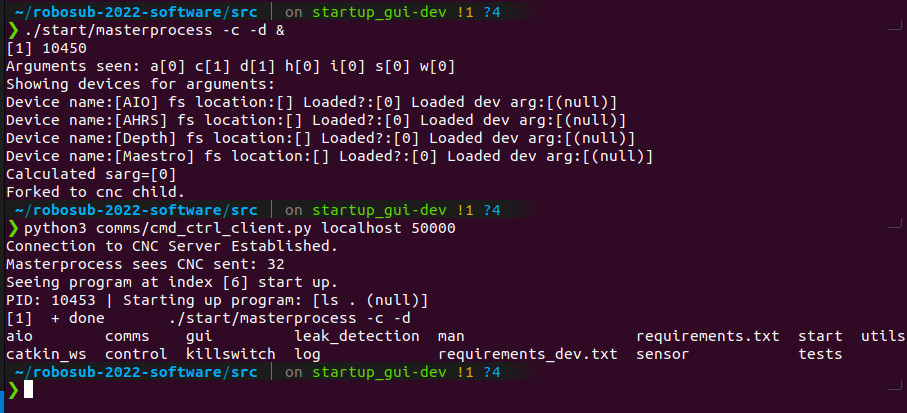

While these arguments initially had to be added to the masterprocess manually, we quickly added new arguments that could start up certain groups of programs, such as the APIs for the GUI or only the ROS-related ones. We went beyond hard-coding it: I even wrote a Command and Control server which could remotely send the masterprocess an s argument at runtime, from a program the masterprocess itself created.

This is what is actually being demonstrated in the image above. The c argument is passed, which creates the Command and Control server with a fork operation; the masterprocess then waits for an external program like the GUI to tell it what to start up. In this image the Command and Control server returns a 32, which for testing purposes would just run the ls command in the current directory.
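The fork-then-wait pattern described above can be sketched in Python on a Unix-like system. This is not the original C code: the pipe used to pass the value back is an assumption (the text does not say how the server and the masterprocess communicate), and `wait_for_program_mask` is a hypothetical name.

```python
import os
import struct


def wait_for_program_mask(requested_mask: int) -> int:
    """Fork a stand-in command-and-control child and block until it reports a mask."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: stand-in for the Command and Control server. A real server
        # would receive this value from an external client such as the GUI.
        try:
            os.close(read_fd)
            os.write(write_fd, struct.pack("<I", requested_mask))
            os.close(write_fd)
        finally:
            os._exit(0)  # exit right away without running the parent's cleanup
    # Parent: stand-in for the masterprocess, blocked until the child reports.
    os.close(write_fd)
    payload = os.read(read_fd, 4)
    os.close(read_fd)
    os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
    (mask,) = struct.unpack("<I", payload)
    return mask
```

Calling `wait_for_program_mask(32)` returns 32 in the parent, which the masterprocess could then decode like any other s argument.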

GUI and ROS APIs

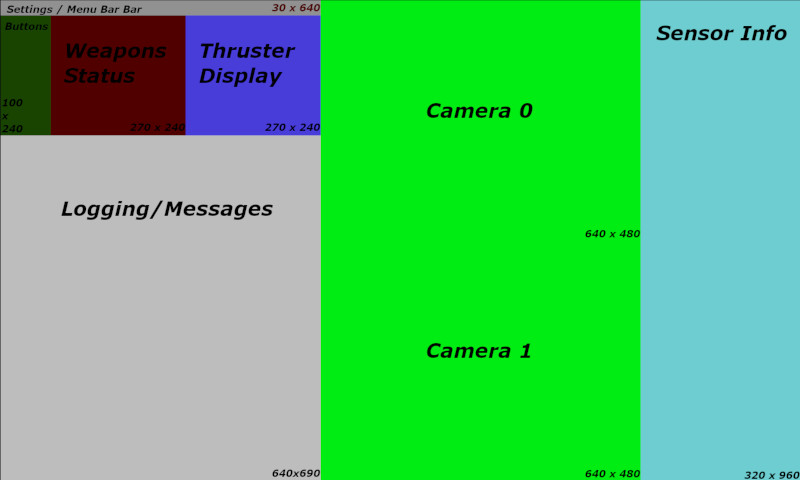

The GUI for Scion was rewritten from the ground up using libraries similar to what I had used for Pico's GUI. I ended up cutting the lines of code needed by over 60% (746 on Scion versus 2149 on Pico) for similar functionality. Scion's GUI had some new requirements, such as integrating with ROS and displaying 2 cameras. Instead of setting up many different UNIX pipes as I did with Pico, I wrote shared memory objects using Python's multiprocessing module to use as few resources as possible. The above image shows the earliest prototype, demonstrating how the subwindows would be split.
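As a rough sketch of the shared memory approach, the snippet below uses `multiprocessing.shared_memory` from the standard library (Python 3.8+). Whether Scion's GUI used this class or another part of the multiprocessing module is an assumption, and `share_bytes` is a hypothetical helper. For simplicity the reader attaches from the same process; normally it would be a separate process attaching by name.

```python
from multiprocessing import shared_memory


def share_bytes(payload: bytes) -> bytes:
    """Write payload into a shared memory block and read it back via a second handle."""
    if not payload:
        raise ValueError("payload must not be empty")
    writer = shared_memory.SharedMemory(create=True, size=len(payload))
    try:
        writer.buf[:len(payload)] = payload
        # A reader (normally another process) attaches by name. Both handles
        # map the same memory, so nothing is sent through a pipe or socket.
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            return bytes(reader.buf[:len(payload)])
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()  # free the block once nobody needs it


frame = share_bytes(b"camera frame bytes")
```

The appeal for camera data is that large frames only need to be written once, instead of being copied through a pipe for every consumer.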

My ROS integration solution was fairly simple. Since any program can listen to any ROS topic, I wrote dedicated "listener" programs which would listen to whatever data the GUI needed from that particular program, package it using struct, and send it over a network connection.
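A listener's "package with struct and send" step might look roughly like the sketch below. The packet layout (a sequence number plus depth and heading readings) and the function names are hypothetical, and `socket.socketpair()` stands in for the real network connection.

```python
import socket
import struct

# Hypothetical packet layout: network byte order, one uint32 sequence
# number followed by two float32 readings (depth, heading).
PACKET = struct.Struct("!Iff")


def send_reading(sock: socket.socket, seq: int, depth: float, heading: float) -> None:
    """Pack one reading into a fixed-size packet and send it."""
    sock.sendall(PACKET.pack(seq, depth, heading))


def recv_reading(sock: socket.socket) -> tuple:
    """Read exactly one packet from the socket and unpack it."""
    data = b""
    while len(data) < PACKET.size:
        chunk = sock.recv(PACKET.size - len(data))
        if not chunk:
            raise ConnectionError("socket closed before a full packet arrived")
        data += chunk
    return PACKET.unpack(data)


# A connected socket pair stands in for the listener-to-GUI link.
listener_end, gui_end = socket.socketpair()
try:
    send_reading(listener_end, 7, 1.5, 270.0)
    reading = recv_reading(gui_end)
finally:
    listener_end.close()
    gui_end.close()
```

A fixed-size binary layout keeps each message small and lets the receiver know exactly how many bytes to wait for.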

Mission Planner

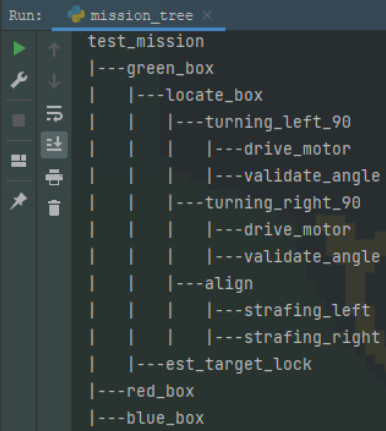

The mission planner was our method for iterating through the directives the robot's AI would follow. We could plan out a mission in an abstract, human-readable way using the mission planner, and the AI could parse its directives, such as "move forward until sensor says x". What's being shown in the above image is a visual representation of a test mission, stored as a tree data structure.
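A mission stored as a tree could be sketched like this in Python. The `Directive` class, the example directive names (other than the one quoted above), and the depth-first visiting order are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Directive:
    """One node of a hypothetical mission tree."""
    name: str
    children: List["Directive"] = field(default_factory=list)


def walk(node: Directive) -> Iterator[str]:
    """Yield directive names depth-first, each parent before its children."""
    yield node.name
    for child in node.children:
        yield from walk(child)


mission = Directive("test mission", [
    Directive("pass through gate", [
        Directive("move forward until sensor says x"),
    ]),
    Directive("surface"),
])

order = list(walk(mission))
```

Walking the tree this way gives a flat sequence of directives that stays readable to humans while remaining easy for a program to iterate over.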

The AI itself (called the heuristics program) was a Mealy machine: it combined the current directive from the mission planner with its current and previous states to determine its next output. Tracking the current state matters in robotics because the correct output depends on it.
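For readers unfamiliar with the term, a Mealy machine produces each output from the pair (current state, current input). The sketch below shows the general technique only; the states, inputs, and outputs are hypothetical and are not taken from the heuristics program.

```python
from typing import Dict, List, Tuple

# (current state, input) -> (next state, output). All names are hypothetical.
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, str]] = {
    ("searching", "nothing_seen"): ("searching", "rotate"),
    ("searching", "gate_seen"): ("approaching", "thrust_forward"),
    ("approaching", "gate_seen"): ("approaching", "thrust_forward"),
    ("approaching", "gate_passed"): ("done", "stop"),
}


def run(inputs: List[str], state: str = "searching") -> Tuple[str, List[str]]:
    """Feed inputs through the machine; return the final state and all outputs."""
    outputs = []
    for symbol in inputs:
        # The output depends on both the state and the input (Mealy behaviour).
        state, output = TRANSITIONS[(state, symbol)]
        outputs.append(output)
    return state, outputs


final_state, outputs = run(["nothing_seen", "gate_seen", "gate_passed"])
```

The same input, such as `gate_seen`, can be handled differently depending on the state the machine is in, which is the property the paragraph above relies on.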

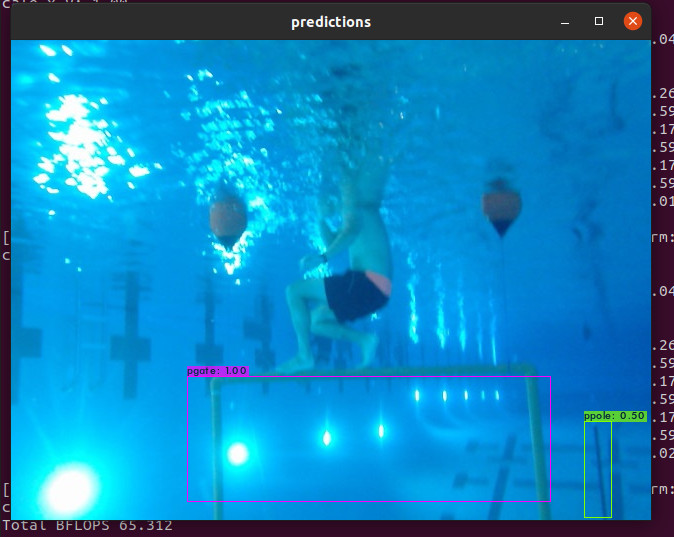

Machine Learning

The most difficult part of the project was getting machine learning working for object recognition. We had an ARM computer, the Nvidia AGX Xavier, which has its own custom libraries and pipelines for image recognition. The above screenshot shows us testing a custom YOLOv3 model on our x86 development computer, which unfortunately didn't translate well to the robot's computer. After experimenting with other networks, we eventually got YOLOv4 working at the last minute.

Results

Testing

Starting in early May, we began going to the pool at SDSU to test our robot's capabilities. We began by testing thrusters on their own, then adding cameras, then adding sensors, and finally testing integration with all control systems and machine learning. We were testing up until the day before our flight to Maryland for the competition, and spent over 100 hours total at the pool.

We wrote some unit tests for our code, but the main thing we were caught up in was integration and putting things together. Building more generic and better-defined interfaces would have been the best way to fix this problem.

Conclusions

While we did not win the competition, I certainly learned much. There were many issues I had no control over, like our main computer dying from a 12V plug going into a 5V connector and subpar integration from other teams, but I still did my best to make the robot work, and we did end up qualifying using my code. This was certainly the hardest team project I've ever worked on or led, but that doesn't mean I didn't enjoy working with others on it.