Pico

When I joined SDSU's Mechatronics team in 2020, we set out to build a low-budget autonomous submarine for the 2021 RoboSub competition, coordinating fully online across a multidisciplinary team. Building a robot remotely posed several challenges, and it was my first exposure to online teamwork, especially with teammates from many different backgrounds. The robot was eventually named "Pico" in reference to its small size.

Design Specification

Our team's own constraint was to keep the price as low as possible for a small robot that could navigate a bathtub-sized pool of water. The choice of onboard computer was obvious: the ubiquitous low-power ARM board, the Raspberry Pi 4. This limited our computing power, but for basic functionality at the lowest possible price we decided there was no better option. We opted for the midrange 4GB model, which gave us plenty of headroom for memory at runtime. The I/O was excellent, with support for several types of cameras and General Purpose I/O (GPIO) pins for anything else we wanted to attach. The computer was one of the most important design decisions we made, and everything else was later built around it.

With our computer selected, we began studying software solutions for deploying our systems. Our lead at the time, Christian, was working on containerization technology at his job and decided we would use a containerized system with a custom message protocol to communicate between containers. For the uninitiated, containerization isolates entire subsystems on a computer into logical partitions, similar to virtual machines. This had several advantages over simply running everything on the host operating system, such as the ability to restart an entire subsystem by restarting its container rather than restarting multiple programs manually. Our container technology of choice was Docker, one of the most popular containerization solutions. To pass messages between our Docker containers, we opted for gRPC, a remote procedure call framework.

From there we began dividing up tasks for the robot. I ended up personally working on much of the integration as the integration project manager, and I wrote APIs that let our test GUI control elements of the robot. My job, basically, was to put many of these systems together and to write the code that gave access to them.

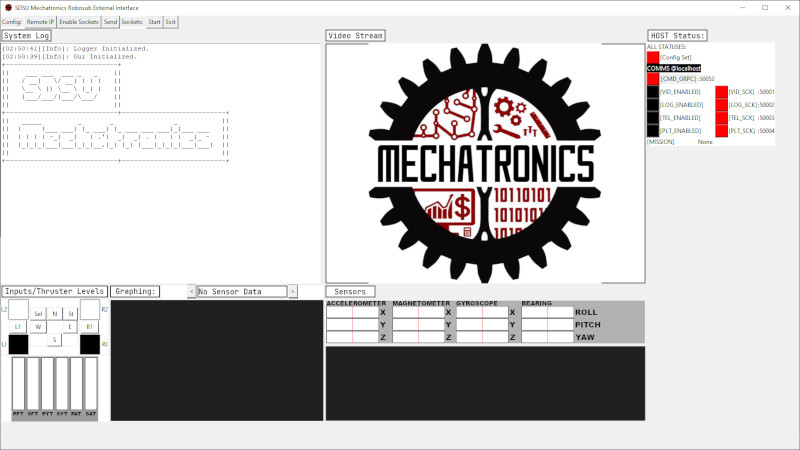

GUI (Graphical User Interface)

Pico was not my introduction to making graphical user interfaces. I had already written a GUI for the Pokemon Stadium AI, but this one needed to be a step up from rendering some text and static images in pygame. Not only did it need to dynamically render elements like a live camera feed, it also needed to accept input from the user and send it to the robot, along with receiving and displaying data from the robot.

My solution was to use tkinter, a GUI library that ships with Python 3. Tkinter's grid system for laying out parts of the GUI was quite solid and worked for my purposes. More importantly, I ended up using Python's multiprocessing library. The code that actually displayed and rendered things ran in a single process, because the GUI's update function had to live in the same program as the renderer, but anything that wasn't rendering or waiting for user input ran in separate processes. This was crucial: the update function never had to wait on other function calls, which let me make the GUI as responsive as possible.

For example, the GUI needed to render images from the camera so anyone looking at it could see the feed. The networking program for these images ran in its own process and sent the images it received over a UNIX pipe, a kernel-managed buffer that one program writes to and another reads from. All the GUI did in its update function was check whether a new image was waiting in the pipe and, if so, load it into the GUI. The heavier processing needed to prepare the image was largely done by the networking program. The same design philosophy was used elsewhere in the GUI to prepare other elements for rendering.
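The polling pattern described above can be sketched roughly as follows. This is a minimal illustration rather than Pico's actual code: the function names (`frame_producer`, `poll_latest`, `schedule_update`, `start_producer`), the string stand-ins for images, and the 33 ms interval are all assumptions made for the example. Only `multiprocessing.Pipe`, `multiprocessing.Process`, and tkinter's `after` method are real library calls.

```python
import multiprocessing as mp
import time


def frame_producer(conn, n_frames=5, delay=0.01):
    """Hypothetical stand-in for the networking process."""
    for i in range(n_frames):
        # A real producer would receive and prepare image data here.
        conn.send(f"frame-{i}")
        time.sleep(delay)
    conn.close()


def poll_latest(conn):
    """Return the newest item waiting in the pipe, or None, without blocking."""
    latest = None
    try:
        while conn.poll():        # poll() with no timeout returns immediately
            latest = conn.recv()  # keep only the most recent frame
    except EOFError:
        pass                      # producer closed its end; nothing more to read
    return latest


def schedule_update(root, conn, on_frame, interval_ms=33):
    """One GUI tick: show a new frame if there is one, then reschedule."""
    frame = poll_latest(conn)
    if frame is not None:
        on_frame(frame)
    # tkinter widgets expose after(ms, callback, *args) for timed callbacks.
    root.after(interval_ms, schedule_update, root, conn, on_frame, interval_ms)


def start_producer():
    """Launch the producer in its own process and return the read end."""
    reader, writer = mp.Pipe(duplex=False)
    proc = mp.Process(target=frame_producer, args=(writer,), daemon=True)
    proc.start()
    writer.close()  # the child process holds its own copy of the write end
    return proc, reader
```

In a tkinter program, `schedule_update(root, reader, show_frame)` would be called once before `root.mainloop()`, where `show_frame` is whatever hypothetical callback loads the image into a widget. Draining the pipe and keeping only the newest frame means a slow GUI tick skips stale frames instead of falling behind.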

Communications Status

To see when we had an active connection to a given subsystem, I needed a visualization in the GUI showing what was connected. The original version was a tkinter button that changed colors. I eventually used OpenCV to render the text in a different color for better visual feedback. Green means a connection to the robot's container for that program is established; red means it has been terminated or was never initialized.

Thruster Visualization

Pico had six thrusters: two "Y" thrusters, which moved it forward and backward and handled turning, and four "Z" thrusters, which moved it up and down in the water to dive or surface. This control scheme was mapped to the GUI for testing purposes with what I had at the time: an Xbox One controller. The top part of the above gif shows the physical inputs to the controller, read through pygame's controller library. The bottom half shows the resulting thruster outputs mapped directly onto the thrusters. These outputs are signed, so each thruster can reverse direction.
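As a rough illustration of how stick inputs could be turned into signed thruster commands, here is a minimal sketch. The mixing rule (differential thrust on the two Y thrusters, one shared command for the four Z thrusters), the deadzone value, and every name in the block are assumptions made for the example, not Pico's actual control code.

```python
def clamp(value, low=-1.0, high=1.0):
    """Limit a command to the range a thruster accepts."""
    return max(low, min(high, value))


def apply_deadzone(value, threshold=0.1):
    """Ignore small stick readings so a resting stick gives zero thrust."""
    return 0.0 if abs(value) < threshold else value


def mix_thrusters(forward, turn, vertical):
    """Map three stick axes in [-1, 1] to six signed thruster commands.

    Assumed layout: the two Y thrusters sit left and right, so adding
    the turn input to one and subtracting it from the other steers.
    """
    forward = apply_deadzone(forward)
    turn = apply_deadzone(turn)
    vertical = apply_deadzone(vertical)
    z = clamp(vertical)
    return {
        "y_left": clamp(forward + turn),
        "y_right": clamp(forward - turn),
        "z": [z, z, z, z],  # all four Z thrusters share one command
    }
```

For instance, `mix_thrusters(0.0, 1.0, 0.0)` drives the left Y thruster fully forward and the right one fully in reverse, which turns the robot in place. Negative values are what let a thruster reverse direction.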

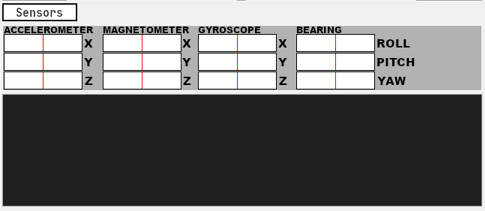

Sensor Display

Sensor output was displayed here in real time. Bars represented the accelerometer, gyroscope, and magnetometer readings, along with calculated roll, pitch, and yaw for a clearer picture of orientation. The bottom bar combined several other sensors, such as a battery voltmeter and an ammeter, to keep track of battery status.
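For readers curious how roll and pitch can be calculated from raw readings, the sketch below uses the common gravity-vector formulas on accelerometer data alone. It is an illustration under stated assumptions, not the code Pico ran: the function name and the axis convention (x forward, y right, z along gravity when level) are hypothetical, and yaw is left out because it also needs the magnetometer.

```python
import math


def roll_pitch_from_accel(ax, ay, az):
    """Estimate roll and pitch in degrees from one accelerometer sample.

    Assumes the robot is not accelerating, so the reading is gravity only.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)
```

A level robot reading `(0, 0, 1)` gives zero roll and zero pitch, while a reading of `(0, 1, 0)` corresponds to a 90 degree roll. Estimates like this are noisy on their own, which is one reason gyroscope data is usually displayed alongside them.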

Docker

As mentioned earlier, we used Docker to separate programs into logical partitions. On my development machine I set up an Ubuntu VM to run Docker and validate that code would work on Linux, then moved the code to a test Pi for further validation. Docker proved to be a useful tool but also quite difficult to learn for the first time. Not only did Docker need to work, but our RPC client for passing messages needed to as well.

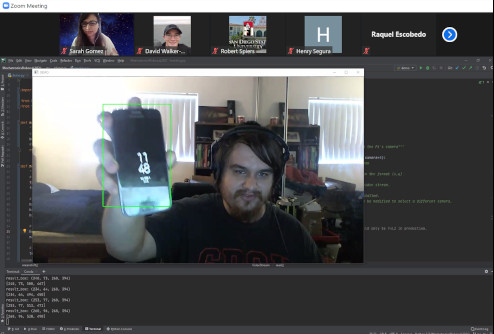

Image Recognition

I wrote the image parsing pipeline but did not train the model for this robot, as one of my colleagues had taken that over. I ended up writing the code that rendered predictions onto images and passed them along to the GUI, since that work was more closely tied to systems integration.

Unused elements

This was supposed to be a real-time graph renderer that displayed data as it came in, but it was cut due to time constraints and because our logging program made it unnecessary. Data loaded into the graph displayed successfully, but the update function was never fully optimized.

Conclusion

In hindsight, the GUI could have been handled more efficiently using smaller shared memory blocks. Using Docker with a custom RPC message layer also amounted to some forced and ultimately unnecessary reinvention of the wheel. This is covered in more detail in the Scion project, but we had basically built a fancy reimplementation of ROS. I learned from these mistakes and incorporated some of those lessons into our next RoboSub project, and in the end this was a fulfilling project to work on.